Coursera Machine Learning Foundations: Week 4 Notes

Feasibility of Learning

Learning is Impossible?

Probability to the Rescue

Inferring Something Unknown

in-sample $\nu$ (orange fraction in the sample) $\rightarrow$ out-of-sample $\mu$ (orange fraction in the bin)

Possible versus Probable

Hoeffding’s Inequality

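In a big sample (N large), the sample fraction $\nu$ is probably close to the bin fraction $\mu$ (within $\epsilon$):

$$P[|\nu - \mu| > \epsilon] \le 2\exp(-2\epsilon^2 N)$$

This is called Hoeffding's Inequality; it applies to marbles, coins, polling, ...

the statement "$\nu = \mu$" is probably approximately correct (PAC)

valid for all N and $\epsilon$

does not depend on $\mu$, no need to know $\mu$

larger sample size N or looser gap $\epsilon$ $\Rightarrow$ higher probability for $\nu \approx \mu$

if N is large, can probably infer the unknown $\mu$ from the known $\nu$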
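To make the bound concrete, here is a minimal sketch that compares the Hoeffding bound $2\exp(-2\epsilon^2 N)$ with a simulated marble experiment. The function names, the bin fraction 0.4, the gap 0.1, and the trial count are all illustrative assumptions, not values from the lecture.

```python
import math
import random

def hoeffding_bound(n, eps):
    """Hoeffding upper bound on P[|nu - mu| > eps] for sample size n."""
    return 2.0 * math.exp(-2.0 * eps ** 2 * n)

def bad_sample_rate(mu, n, eps, trials=2000, seed=0):
    """Empirical fraction of samples whose orange fraction nu misses mu by more than eps."""
    rng = random.Random(seed)
    bad = 0
    for _ in range(trials):
        # Draw n marbles; each is orange with probability mu.
        nu = sum(rng.random() < mu for _ in range(n)) / n
        if abs(nu - mu) > eps:
            bad += 1
    return bad / trials

if __name__ == "__main__":
    # Assumed demo values: mu = 0.4, eps = 0.1.
    for n in (10, 100, 1000):
        print(n, bad_sample_rate(0.4, n, 0.1), hoeffding_bound(n, 0.1))
```

The simulated rate should sit below the bound for every N, and both shrink as N grows; for small N the bound can exceed 1, in which case it says nothing.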

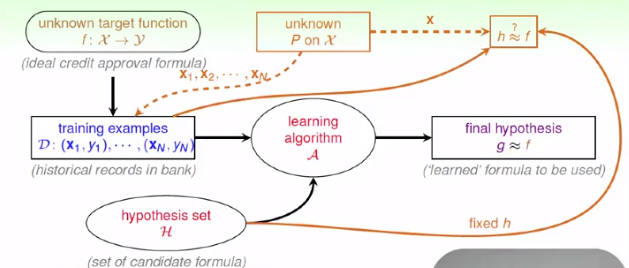

Connection to Learning

Added Components

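for any fixed h, can probably infer the unknown $E_{out}(h)$ (error rate of h over the whole distribution P) from the known $E_{in}(h)$ (error rate of h on the N training examples)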

The Formal Guarantee

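if $E_{in}(h) \approx E_{out}(h)$ and $E_{in}(h)$ is small $\Rightarrow$ $E_{out}(h)$ is small $\Rightarrow$ $h \approx f$ with respect to P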
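As a rough illustration of the guarantee for one fixed h, the sketch below measures the in-sample error of a hypothesis on samples of growing size and compares it with the out-of-sample error, which is known exactly here. The target `f`, the hypothesis `h`, and the uniform distribution on [-1, 1] are made-up assumptions chosen so that the out-of-sample error is easy to compute by hand.

```python
import random

def f(x):
    """Assumed target: +1 for x > 0, else -1."""
    return 1 if x > 0 else -1

def h(x):
    """Assumed fixed hypothesis: same shape, threshold shifted to 0.2."""
    return 1 if x > 0.2 else -1

def in_sample_error(n, seed=0):
    """E_in(h): fraction of n points drawn uniformly from [-1, 1] where h disagrees with f."""
    rng = random.Random(seed)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    return sum(h(x) != f(x) for x in xs) / n

# h and f disagree exactly on (0, 0.2], which has probability 0.2 / 2 under P.
E_OUT = 0.1

if __name__ == "__main__":
    for n in (10, 100, 10000):
        print(n, in_sample_error(n), E_OUT)
```

With large N the printed in-sample error should land close to 0.1; with N = 10 it can be far off, which is the case Hoeffding's inequality makes unlikely only as N grows.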

Verification of One h

if $E_{in}(h)$ is small for the fixed h and A picks the h as g $\Rightarrow$ "g = f" PAC

if A is forced to pick THE h as g $\Rightarrow$ $E_{in}(h)$ is almost always not small $\Rightarrow$ "g $\ne$ f" PAC

real learning:

A shall make choices $\in \mathcal{H}$ (like PLA) rather than being forced to pick one h.

The ‘Verification’ Flow

Connection to Real Learning

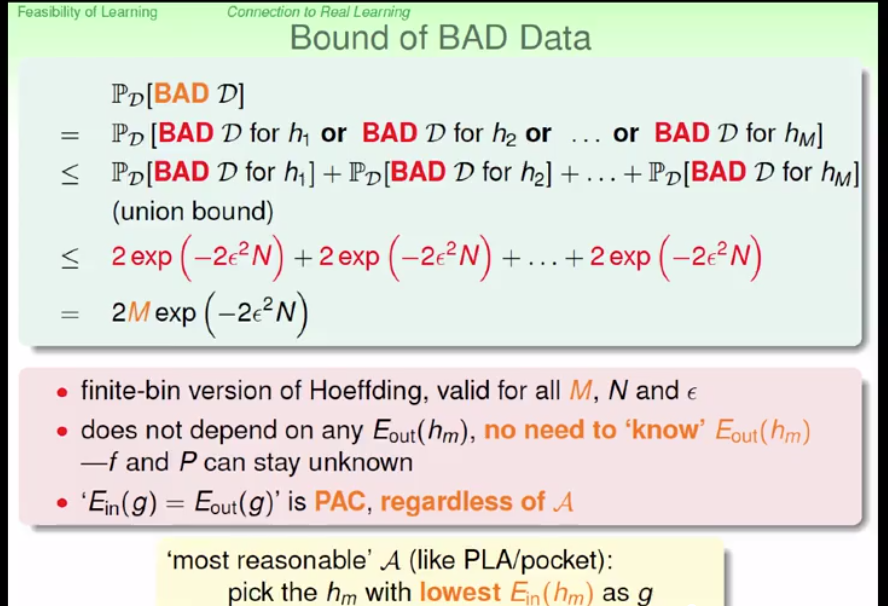

BAD Sample and BAD Data

BAD Sample: e.g., $E_{out} = \frac{1}{2}$, but getting all heads ($E_{in} = 0$)

BAD Data for One h: $E_{out}(h)$ and $E_{in}(h)$ far away

BAD data for Many h

BAD data for many h $\Leftrightarrow$ no "freedom of choice" by A $\Leftrightarrow$ there exists some h such that $E_{out}(h)$ and $E_{in}(h)$ are far away
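The all-heads sample shows why having many h is dangerous: one fair coin rarely comes up all heads, but among many coins some coin probably does. A small sketch of that arithmetic follows; the counts of 150 coins and 5 flips are assumed for illustration and the function names are hypothetical.

```python
def prob_all_heads_single(flips):
    """Probability that one fair coin shows heads on every flip."""
    return 0.5 ** flips

def prob_some_coin_all_heads(coins, flips):
    """Probability that at least one of several independent fair coins shows all heads."""
    return 1.0 - (1.0 - prob_all_heads_single(flips)) ** coins

if __name__ == "__main__":
    print(prob_all_heads_single(5))  # 0.03125
    print(prob_some_coin_all_heads(150, 5))
```

Under these assumptions the second probability exceeds 99%, so if A is free to pick the coin with the best-looking sample, a BAD sample is almost guaranteed to be among the choices.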

Bound of BAD Data
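A sketch of how this bound can be derived, assuming a finite hypothesis set $\mathcal{H} = \{h_1, \dots, h_M\}$ and Hoeffding's inequality $P[|E_{in}(h) - E_{out}(h)| > \epsilon] \le 2\exp(-2\epsilon^2 N)$ for each fixed h (the subscripted names $h_m$ are notation introduced here for the derivation):

$$
\begin{aligned}
P_{\mathcal{D}}[\text{BAD } \mathcal{D}]
&= P_{\mathcal{D}}[\text{BAD } \mathcal{D} \text{ for } h_1 \text{ or } \dots \text{ or BAD } \mathcal{D} \text{ for } h_M] \\
&\le \sum_{m=1}^{M} P_{\mathcal{D}}[\text{BAD } \mathcal{D} \text{ for } h_m] \quad \text{(union bound)} \\
&\le \sum_{m=1}^{M} 2\exp(-2\epsilon^2 N) \\
&= 2M\exp(-2\epsilon^2 N)
\end{aligned}
$$

The union bound is the loose step: it treats the BAD events of different hypotheses as if they never overlap.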

The Statistical Learning Flow

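if $|\mathcal{H}| = M$ is finite and N is large enough, then for whatever g picked by A, $E_{out}(g) \approx E_{in}(g)$

if A finds one g with $E_{in}(g) \approx 0$, PAC guarantee for $E_{out}(g) \approx 0$ $\Rightarrow$ learning possible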
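"N large enough" can be made quantitative by solving the finite-hypothesis bound $2M\exp(-2\epsilon^2 N) \le \delta$ for N, which gives $N \ge \frac{1}{2\epsilon^2}\ln\frac{2M}{\delta}$. The sketch below does that calculation; the tolerance name `delta` and the example values are assumptions for illustration.

```python
import math

def bad_data_bound(m, n, eps):
    """Upper bound 2 * M * exp(-2 * eps^2 * N) on the probability of BAD data."""
    return 2.0 * m * math.exp(-2.0 * eps ** 2 * n)

def sample_size(m, eps, delta):
    """Smallest integer N for which the bound above is at most delta."""
    return math.ceil(math.log(2.0 * m / delta) / (2.0 * eps ** 2))

if __name__ == "__main__":
    # Assumed demo values: M = 100 hypotheses, eps = 0.1, delta = 0.05.
    n = sample_size(100, 0.1, 0.05)
    print(n, bad_data_bound(100, n, 0.1))
```

Note that N grows only logarithmically in M, so a finite but large hypothesis set is still affordable; the formula gives no answer when M is infinite.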

$M = \infty$? See you in the next lectures~

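Rant

This homework was really hard. It took me an hour and a half to barely pass, especially the last few questions on implementing PLA and the pocket algorithm.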